Beyond Accuracy: The Hidden Power of Metrics & Tools in AI Development

Patricia Butina

Marketing Specialist

Published: March 21, 2025

Topic: Insights

In the rapidly evolving landscape of artificial intelligence (AI), Large Language Models (LLMs) have emerged as key components in various applications, from customer service and content creation to scientific research and translation. The effectiveness of these models is deeply intertwined with the metrics and tools used to evaluate them. As the adage goes, "what gets measured gets improved," and in the context of LLMs, this principle is more pertinent than ever. The choice of evaluation metrics and tools can significantly impact the performance, reliability, and ethical alignment of LLMs, making it a critical decision for developers and stakeholders.

Standard Metrics: Understanding Their Nuances

Standard metrics for evaluating generated text have been widely adopted, but each comes with specific strengths and limitations:

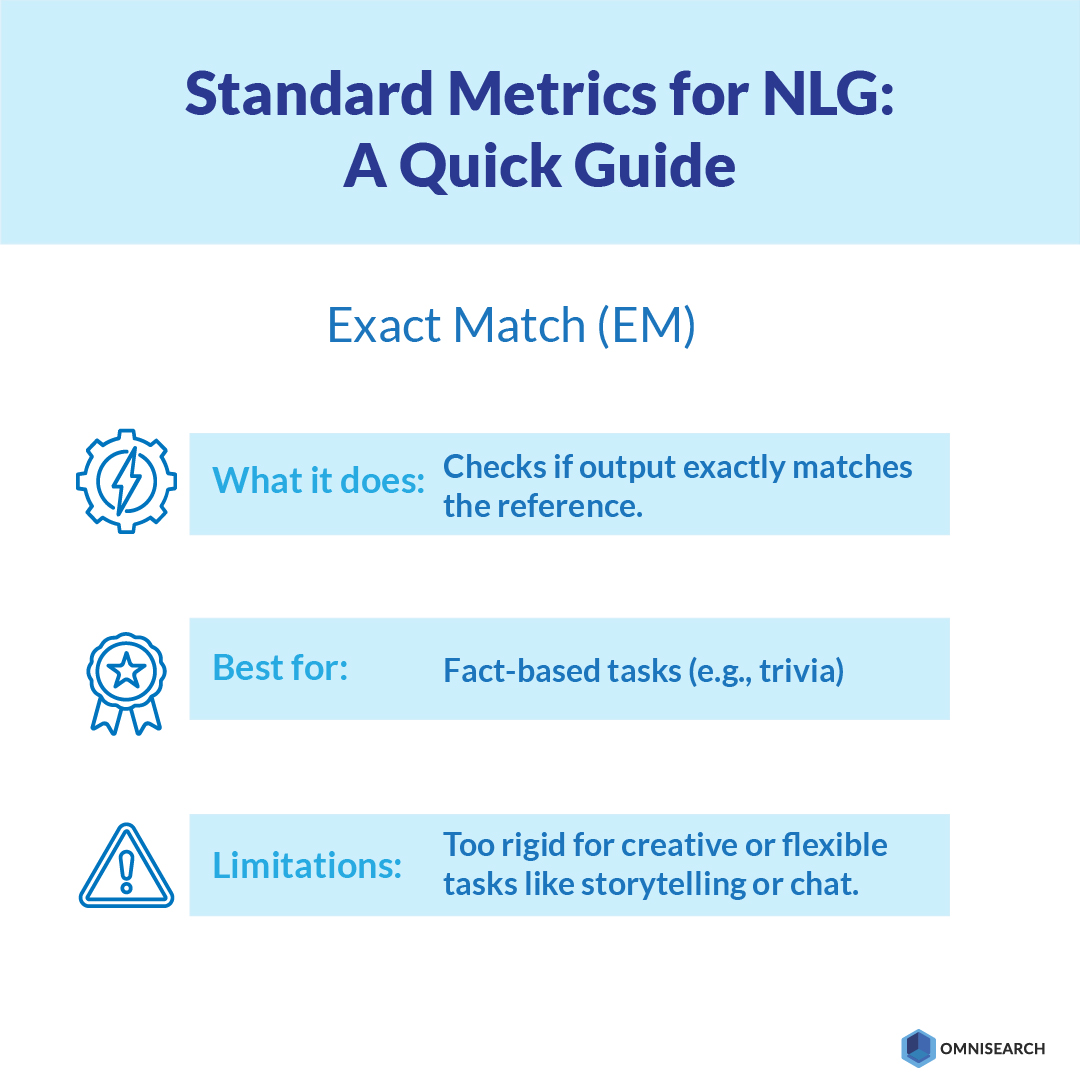

- Exact Match (EM): Ideal for fact-based tasks, EM checks whether the model's output matches the expected answer exactly, often after light normalization such as lowercasing and removing punctuation. However, it falls short in tasks requiring creativity or flexibility, where many different responses can be correct. For instance, EM works well for trivia question answering, but in creative writing or conversational AI it scores every valid paraphrase as a miss.

- BERTScore: Uses contextual embeddings from a BERT-style model to assess semantic similarity. Each token in the output is matched to its most similar token in the reference (by cosine similarity), and the matches are averaged into precision, recall, and F1. Unlike word-matching metrics, BERTScore credits paraphrases and contextual alignment, making it well suited to creative or conversational outputs and other tasks that require nuanced language understanding.

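- BLEU (Bilingual Evaluation Understudy): Widely used in machine translation, BLEU measures how many of the output's n-grams also appear in one or more reference translations, with a brevity penalty for outputs that are too short. Because it rewards only surface overlap, it penalizes legitimate stylistic variation, limiting its use in tasks requiring natural-sounding language. BLEU works well for tightly structured text but struggles with tasks that allow flexibility in expression.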

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Primarily used for summarization tasks, ROUGE measures the overlap between candidate and reference summaries, either as shared n-grams (ROUGE-N) or as the longest common subsequence of words (ROUGE-L). While scalable and simple to implement, it emphasizes lexical similarity over semantic quality and can overlook differences in meaning.

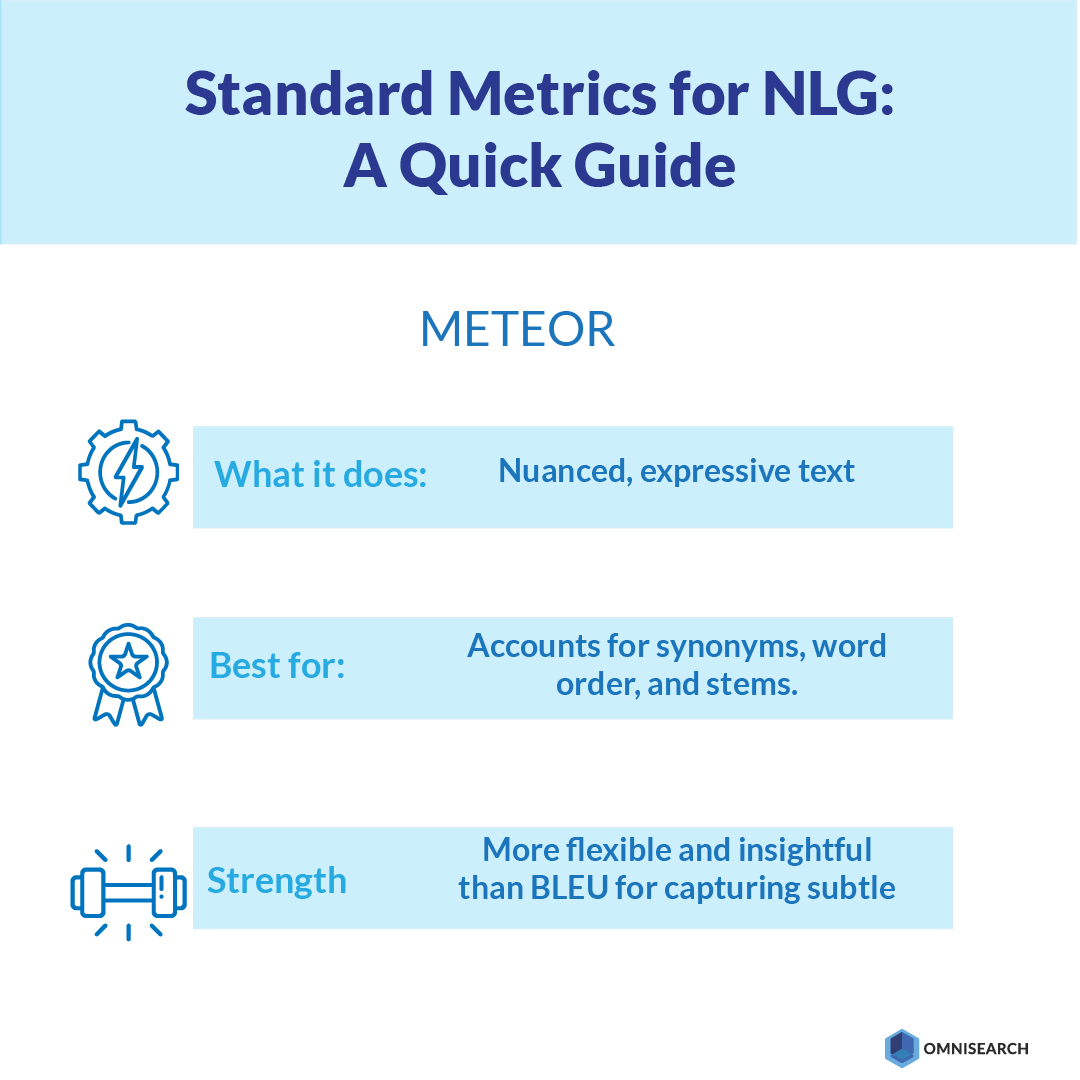

- METEOR: Aligns output and reference words using exact matches, stems, and synonyms, combines precision and recall (weighting recall more heavily), and applies a penalty when matched words appear in a fragmented order. This makes it more forgiving of legitimate rewording than BLEU and gives a more informative assessment in tasks where the model needs to convey complex ideas or subtle differences in meaning.
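As a concrete illustration of exact match, here is a minimal Python sketch. The normalization it applies (lowercasing, stripping punctuation and the English articles "a", "an", and "the", collapsing whitespace) follows the convention popularized by the SQuAD evaluation script; treat it as an assumption, since the right normalization is task-dependent. The function names are illustrative.

```python
import re
import string


def normalize_answer(text: str) -> str:
    """Lowercase, strip punctuation and English articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, references: list[str]) -> int:
    """Return 1 if the normalized prediction equals any normalized reference, else 0."""
    pred = normalize_answer(prediction)
    return int(any(pred == normalize_answer(ref) for ref in references))


print(exact_match("The Eiffel Tower.", ["Eiffel Tower"]))        # 1
print(exact_match("It's the tower in Paris", ["Eiffel Tower"]))  # 0: correct in spirit, but no match
```

Normalization absorbs trivial differences such as capitalization and a leading "The", but any genuine rewording still scores zero.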
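BERTScore's core step is greedy matching over token embeddings. Each candidate token is paired with its most similar reference token by cosine similarity to form precision, and each reference token with its most similar candidate token to form recall. The sketch below shows only that step, on hand-written toy vectors that stand in for the contextual embeddings a BERT-style model would produce. It omits the optional IDF weighting and baseline rescaling, and the names are illustrative; in practice the `bert-score` Python package handles the model and tokenization.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def greedy_bertscore(cand_vecs, ref_vecs):
    """BERTScore-style (precision, recall, F1) from per-token embedding vectors."""
    # sims[i][j] = similarity between candidate token i and reference token j
    sims = [[cosine(c, r) for r in ref_vecs] for c in cand_vecs]
    # Precision: each candidate token takes its best-matching reference token.
    precision = sum(max(row) for row in sims) / len(cand_vecs)
    # Recall: each reference token takes its best-matching candidate token.
    recall = sum(max(col) for col in zip(*sims)) / len(ref_vecs)
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Hypothetical 2-d "embeddings": the reference has two tokens, the candidate covers one.
reference = [[1.0, 0.0], [0.0, 1.0]]
candidate = [[1.0, 0.0]]
print(greedy_bertscore(candidate, reference))  # precision 1.0, recall 0.5
```

The single candidate token matches one reference token perfectly, so precision is 1.0, but half of the reference content has no counterpart, so recall drops to 0.5.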
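To see where BLEU's sensitivity to wording comes from, the sketch below computes sentence-level BLEU from scratch: clipped n-gram precisions for n = 1 to 4, combined by a geometric mean and multiplied by a brevity penalty. It is a simplified, unsmoothed version for illustration only (the function names are hypothetical); published results normally use corpus-level BLEU from a standard tool such as sacreBLEU.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count every n-gram (as a tuple) in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, references, max_n=4):
    """Unsmoothed sentence-level BLEU with uniform weights over 1..max_n-grams.

    candidate: list of tokens; references: list of token lists.
    """
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        total = sum(cand.values())
        if total == 0:
            return 0.0  # candidate is shorter than n tokens
        # Clip each n-gram count by the most times it appears in any single reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngram_counts(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
        if clipped == 0:
            return 0.0  # without smoothing, one zero precision makes the geometric mean 0
        log_precision_sum += math.log(clipped / total)

    # Brevity penalty against the reference length closest to the candidate length.
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision_sum / max_n)


ref = "the cat is on the mat".split()
cand = "the cat sat on the mat".split()
print(sentence_bleu(ref, [ref]))                        # 1.0
print(sentence_bleu(cand, [ref]))                       # 0.0
print(round(sentence_bleu(cand, [ref], max_n=2), 4))    # 0.7071
```

Replacing a single word ("is" with "sat") removes every matching 4-gram, so unsmoothed sentence BLEU drops to zero even though the meaning barely changes. This is the penalty for stylistic variation in its most extreme form.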
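Here is a minimal sketch of two common ROUGE variants, ROUGE-1 (unigram overlap) and ROUGE-L (longest common subsequence), each reported as precision, recall, and F1. It is a simplified illustration that skips the stemming and tokenization options of packaged implementations, and the function names are hypothetical.

```python
from collections import Counter


def rouge_n(candidate, reference, n=1):
    """ROUGE-N (precision, recall, F1) from clipped n-gram overlap."""
    def grams(tokens):
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand, ref = grams(candidate), grams(reference)
    overlap = sum((cand & ref).values())  # & keeps the minimum count of each shared n-gram
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if x == y else max(dp[i - 1][j], dp[i][j - 1])
    return dp[-1][-1]


def rouge_l(candidate, reference):
    """ROUGE-L (precision, recall, F1) based on the longest common subsequence."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0, 0.0, 0.0
    precision, recall = lcs / len(candidate), lcs / len(reference)
    return precision, recall, 2 * precision * recall / (precision + recall)


ref = "police killed the gunman".split()
same_order = "police kill the gunman".split()
reordered = "the gunman kill police".split()
print(rouge_n(same_order, ref)[2], rouge_n(reordered, ref)[2])  # 0.75 0.75
print(rouge_l(same_order, ref)[2], rouge_l(reordered, ref)[2])  # 0.75 0.5
```

Both candidates receive the same ROUGE-1 score, while ROUGE-L rewards the one that preserves the reference word order. Neither counts "kill" as a match for "killed", which shows how purely lexical overlap can miss meaning.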
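To show how METEOR combines recall-weighted matching with a word-order penalty, here is a simplified sketch of the original METEOR scoring formula: a harmonic mean that weights recall nine times as heavily as precision, and a fragmentation penalty based on how many contiguous "chunks" the matched words form. Two assumptions simplify it: only exact word matches are used (no stemming or synonym modules), and the alignment is greedy rather than the crossing-minimizing search the real metric performs. For actual evaluation, NLTK provides a fuller implementation in `nltk.translate.meteor_score`.

```python
def simple_meteor(candidate, reference, alpha=0.9, beta=3.0, gamma=0.5):
    """Simplified METEOR using exact unigram matches only (no stemming or synonyms).

    score   = F_mean * (1 - penalty)
    F_mean  = P * R / (alpha * P + (1 - alpha) * R)   # alpha=0.9 weights recall 9:1
    penalty = gamma * (chunks / matches) ** beta
    """
    # Greedy alignment: each candidate token takes the first unused identical reference token.
    used = set()
    alignment = []  # (candidate_index, reference_index) pairs, in candidate order
    for i, token in enumerate(candidate):
        for j, ref_token in enumerate(reference):
            if j not in used and token == ref_token:
                used.add(j)
                alignment.append((i, j))
                break

    matches = len(alignment)
    if matches == 0:
        return 0.0
    precision = matches / len(candidate)
    recall = matches / len(reference)
    f_mean = precision * recall / (alpha * precision + (1 - alpha) * recall)

    # A chunk is a maximal run of matches that are contiguous in both sequences.
    chunks = 1
    for (i_prev, j_prev), (i, j) in zip(alignment, alignment[1:]):
        if i != i_prev + 1 or j != j_prev + 1:
            chunks += 1
    penalty = gamma * (chunks / matches) ** beta
    return f_mean * (1 - penalty)


ref = "police killed the gunman".split()
print(round(simple_meteor(ref, ref), 4))                                 # 0.9922
print(round(simple_meteor("the gunman killed police".split(), ref), 4))  # 0.7891
```

Even an identical sentence scores slightly below 1, because a single chunk still incurs a small penalty. Reordering the phrases splits the matches into three chunks and lowers the score sharply, although every word is matched.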

Task-Specific Metrics: Customization for Enhanced Evaluation

Beyond standard metrics, task-specific evaluation is essential for optimizing LLM performance. For instance, in summarization tasks, custom metrics might assess whether the summary captures enough of the key information in the original text and whether it introduces contradictions or hallucinations. Well-designed custom metrics are quantitative, reliable, and accurate, and they align with human judgments, so they can confirm that the LLM meets the requirements of a specific use case.
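The two summarization checks described here, sufficient information and absence of hallucinations, can be approximated with simple heuristics. The sketch below is hypothetical and deliberately crude: it measures coverage as the fraction of hand-written key facts that appear verbatim in the summary, and it flags numbers in the summary that never appear in the source as a cheap proxy for hallucination. Stronger methods (for example, entailment or question-answering models) are typically used in practice, but the structure, one score per requirement, stays the same.

```python
import re


def key_fact_coverage(summary: str, key_facts: list[str]) -> float:
    """Fraction of hand-written key facts that appear verbatim (case-insensitive) in the summary."""
    text = summary.lower()
    found = sum(1 for fact in key_facts if fact.lower() in text)
    return found / len(key_facts) if key_facts else 1.0


def unsupported_numbers(summary: str, source: str) -> set[str]:
    """Numbers in the summary that never occur in the source: a crude hallucination flag."""
    number = re.compile(r"\d+(?:\.\d+)?")
    return set(number.findall(summary)) - set(number.findall(source))


source = "Revenue rose 12% to $4.5 million in 2024, driven by subscriptions."
summary = "Revenue grew 15% to $4.5 million in 2024."
print(key_fact_coverage(summary, ["revenue", "4.5 million", "subscriptions"]))  # 2 of 3 facts
print(unsupported_numbers(summary, source))  # {'15'}
```

Here the summary omits the "subscriptions" fact, and the figure "15" is flagged because the source reports 12%.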

In creative writing or conversational AI, metrics might focus on coherence, fluency, and engagement, using human evaluators or automated tools to assess these subjective qualities. The goal is to create a comprehensive evaluation framework that captures both the technical accuracy and the human-centric aspects of language generation.
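For subjective qualities such as coherence, fluency, and engagement, human ratings are usually collected per criterion and then aggregated. The sketch assumes a hypothetical setup in which several raters score each criterion on a 1 to 5 scale. It reports each criterion's mean normalized to [0, 1], so human ratings can sit alongside automated metrics, plus the population standard deviation as a rough disagreement signal; formal agreement statistics such as Krippendorff's alpha are better suited to real studies.

```python
from statistics import mean, pstdev


def summarize_ratings(ratings: dict[str, list[int]], scale_max: int = 5) -> dict[str, dict[str, float]]:
    """Aggregate per-criterion rater scores given on a 1..scale_max scale.

    normalized_mean maps the average score onto [0, 1];
    spread is the population standard deviation in raw scale points.
    """
    report = {}
    for criterion, scores in ratings.items():
        report[criterion] = {
            "normalized_mean": (mean(scores) - 1) / (scale_max - 1),
            "spread": pstdev(scores),
        }
    return report


# Hypothetical ratings from three raters for one generated response.
ratings = {"coherence": [4, 5, 4], "fluency": [5, 5, 5], "engagement": [2, 4, 3]}
for criterion, stats in summarize_ratings(ratings).items():
    print(f"{criterion:<11} mean={stats['normalized_mean']:.2f} spread={stats['spread']:.2f}")
```

In this example the raters agree completely on fluency but disagree most on engagement, which suggests that criterion needs a clearer rubric before its scores are trusted.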

Tools and Libraries: Automating Evaluation Processes

The automation of LLM evaluation has become indispensable, with tools and platforms playing a crucial role in streamlining and scaling the process:

- AI Testing and Validation Tools: Platforms like RagaAI offer comprehensive pipelines for testing, benchmarking, and validating LLM performance, analogous to Continuous Integration/Continuous Deployment (CI/CD) in software development. These tools standardize AI assurance workflows so that models are rigorously tested and validated before deployment.

- AI Observability Platforms: These platforms provide real-time insight into model behavior, enabling teams to debug outputs, monitor performance, and optimize models for specific use cases. Observability isn't just about identifying errors; it's also about understanding how models interact with users and how their behavior shifts as usage and context change.
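The CI/CD analogy for testing tools can be made concrete with a regression gate: score a fixed test set on every model or prompt change and block the release if any metric falls below its threshold. This is a generic sketch, not RagaAI's API (which is not described here); the stand-in metric, the threshold, and the test examples are all hypothetical.

```python
from typing import Callable

# Hypothetical metric signature: (prediction, reference) -> score in [0, 1].
Metric = Callable[[str, str], float]


def run_eval_gate(examples, metrics: dict[str, Metric],
                  thresholds: dict[str, float]) -> tuple[bool, dict[str, float]]:
    """Average each metric over a fixed test set and compare against minimum thresholds."""
    averages = {}
    for name, metric in metrics.items():
        scores = [metric(pred, ref) for pred, ref in examples]
        averages[name] = sum(scores) / len(scores)
    passed = all(averages[name] >= minimum for name, minimum in thresholds.items())
    return passed, averages


def exact(pred: str, ref: str) -> float:
    """Case-insensitive exact match, used here as a stand-in metric."""
    return float(pred.strip().lower() == ref.strip().lower())


# (model prediction, reference answer) pairs from a hypothetical regression suite.
examples = [("Paris", "paris"), ("Berlin", "Berlin"), ("Rome", "Madrid")]
passed, averages = run_eval_gate(examples, {"exact_match": exact}, {"exact_match": 0.8})
print(passed, averages)  # False: only 2 of 3 examples match, below the 0.8 threshold
```

In a CI pipeline, the job would exit with a non-zero status when `passed` is False, so a quality regression blocks the merge the same way a failing unit test does.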
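At its simplest, observability means logging every model call and watching aggregate signals over a recent window. The sketch below keeps a rolling window of hypothetical request records (latency plus the result of an automated output check) and raises an alert when the failure rate crosses a threshold. The field names, window size, and threshold are illustrative assumptions, not any particular platform's schema.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One logged model call (illustrative fields only)."""
    latency_ms: float
    passed_check: bool  # result of an automated output check, e.g. a format or safety rule


class RollingMonitor:
    """Tracks the most recent `window` requests and flags a rising failure rate."""

    def __init__(self, window: int = 100, max_failure_rate: float = 0.05):
        self.records = deque(maxlen=window)  # oldest records drop off automatically
        self.max_failure_rate = max_failure_rate

    def log(self, record: RequestRecord) -> None:
        self.records.append(record)

    def failure_rate(self) -> float:
        if not self.records:
            return 0.0
        return sum(not r.passed_check for r in self.records) / len(self.records)

    def mean_latency_ms(self) -> float:
        if not self.records:
            return 0.0
        return sum(r.latency_ms for r in self.records) / len(self.records)

    def should_alert(self) -> bool:
        return self.failure_rate() > self.max_failure_rate


monitor = RollingMonitor(window=4, max_failure_rate=0.25)
for latency, ok in [(120, True), (95, True), (300, False), (110, False)]:
    monitor.log(RequestRecord(latency, ok))
print(monitor.failure_rate(), monitor.should_alert())  # 0.5 True
```

A bounded window keeps the signal focused on current behavior, so a regression introduced by a new prompt or model version shows up quickly instead of being diluted by older traffic.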

Cross-Model Evaluation: Leveraging LLM Feedback Loops

A newer approach to LLM evaluation uses one model to evaluate another, often called "LLM-as-a-judge." It resembles the feedback step in Reinforcement Learning from Human Feedback (RLHF), except that another LLM plays the role of the human evaluator. This method offers several advantages:

- Scalability: Human feedback is slow and expensive to scale, especially when models generate billions of tokens daily. An LLM evaluator, on the other hand, can score outputs at machine speed, allowing for rapid iteration and improvement.

- Contextual Insight: High-quality LLMs handle subtlety and nuance well, and in subjective or creative tasks their judgments often align more closely with human ratings than traditional reference-based metrics do. They can assess coherence, relevance, and engagement, qualities that metrics like BLEU or ROUGE do not measure directly.

- Consistency: Unlike human raters, LLMs don't get tired, so with a fixed prompt and deterministic decoding settings they can apply the same evaluation standard across large datasets. This consistency is crucial for maintaining high-quality outputs and for checking whether models meet specific performance benchmarks.
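A minimal sketch of the LLM-as-a-judge pattern: format a rubric prompt, send it to an evaluator model, and parse a numeric score from the reply. The `judge` callable is a hypothetical placeholder for whatever model client you use, and `stub_judge` is a fake that lets the example run offline; the prompt wording and the 1 to 5 scale are assumptions. Counting unparseable replies separately, rather than silently dropping them, keeps format failures visible.

```python
import re
from typing import Callable, Optional

# Hypothetical placeholder: sends a prompt to an evaluator LLM and returns its text reply.
Judge = Callable[[str], str]

RUBRIC_PROMPT = """You are grading a response for {criterion}.
Question: {question}
Response: {response}
Reply with a single line of the form "Score: N" where N is an integer from 1 to 5."""


def parse_score(reply: str) -> Optional[int]:
    """Extract the 1-5 score from the judge's reply; None if the format was not followed."""
    match = re.search(r"Score:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def judge_batch(judge: Judge, items, criterion: str = "coherence"):
    """Score (question, response) pairs; returns (mean score, number of unparseable replies)."""
    scores, failures = [], 0
    for question, response in items:
        prompt = RUBRIC_PROMPT.format(criterion=criterion, question=question, response=response)
        score = parse_score(judge(prompt))
        if score is None:
            failures += 1
        else:
            scores.append(score)
    mean = sum(scores) / len(scores) if scores else float("nan")
    return mean, failures


def stub_judge(prompt: str) -> str:
    """Offline stand-in for a real model call, so the sketch runs without network access."""
    return "Score: 2" if "asdf" in prompt else "Score: 4"


items = [("What is BLEU?", "An n-gram precision metric for machine translation."),
         ("Define ROUGE.", "asdf asdf")]
print(judge_batch(stub_judge, items))  # (3.0, 0)
```

LLM judges have known biases (for example, favoring longer answers), so it is good practice to spot-check their scores against a sample of human ratings before relying on them at scale.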

Future Directions: Task-Specific Evaluation Stacks

The future of LLM evaluation lies in building task-specific stacks that combine traditional metrics with semantic tools and cross-model strategies. This approach helps ensure that LLMs deliver value across diverse applications, from resolving customer queries to generating creative content. The stakes are high, but with the right tools and metrics, developers can create AI systems that are not only effective but also trustworthy and aligned with ethical standards.

Conclusion

The evaluation of LLMs is no longer a secondary consideration but a foundational aspect of AI development. As AI becomes integrated into more sectors, the choice of evaluation metrics and tools will be pivotal in ensuring that these models meet the high standards of performance, reliability, and ethical responsibility required in today's digital landscape. By leveraging a combination of traditional metrics, task-specific evaluation frameworks, and innovative cross-model strategies, developers can unlock the full potential of LLMs and drive innovation in AI applications.

Moreover, the integration of AI observability platforms and testing tools will continue to play a crucial role in refining model performance and ensuring that LLMs adapt effectively to changing user needs and technological advancements. The future of AI is deeply intertwined with the sophistication and accuracy of its evaluation processes, and it is up to developers and researchers to harness these tools to create AI systems that are both powerful and responsible.